Geo-harmonizer project implementation plan 2020–2022

Prepared by: OpenGeoHub, CTU in Prague, Terrasigna, mundialis and MultiOne

General principles

Geo-harmonizer: EU-wide automated mapping system for harmonization of Open Data based on FOSS4G and Machine Learning

Programme: CEF Telecom.

- Project summary

- Project partners

- Project team

- System components

- Key development principles

- Data harmonization/production using Machine Learning

- Development principles for web-interfaces

- Data and storage model

- Geographical area of interest

- Design of the data portal functionality and main workflows

- Accessibility

- National support

- Impacts

- General dissemination & user engagement strategy

- Quality assessment of the new produced data layers

- Sustainability

- Activities and tasks

- References

System components

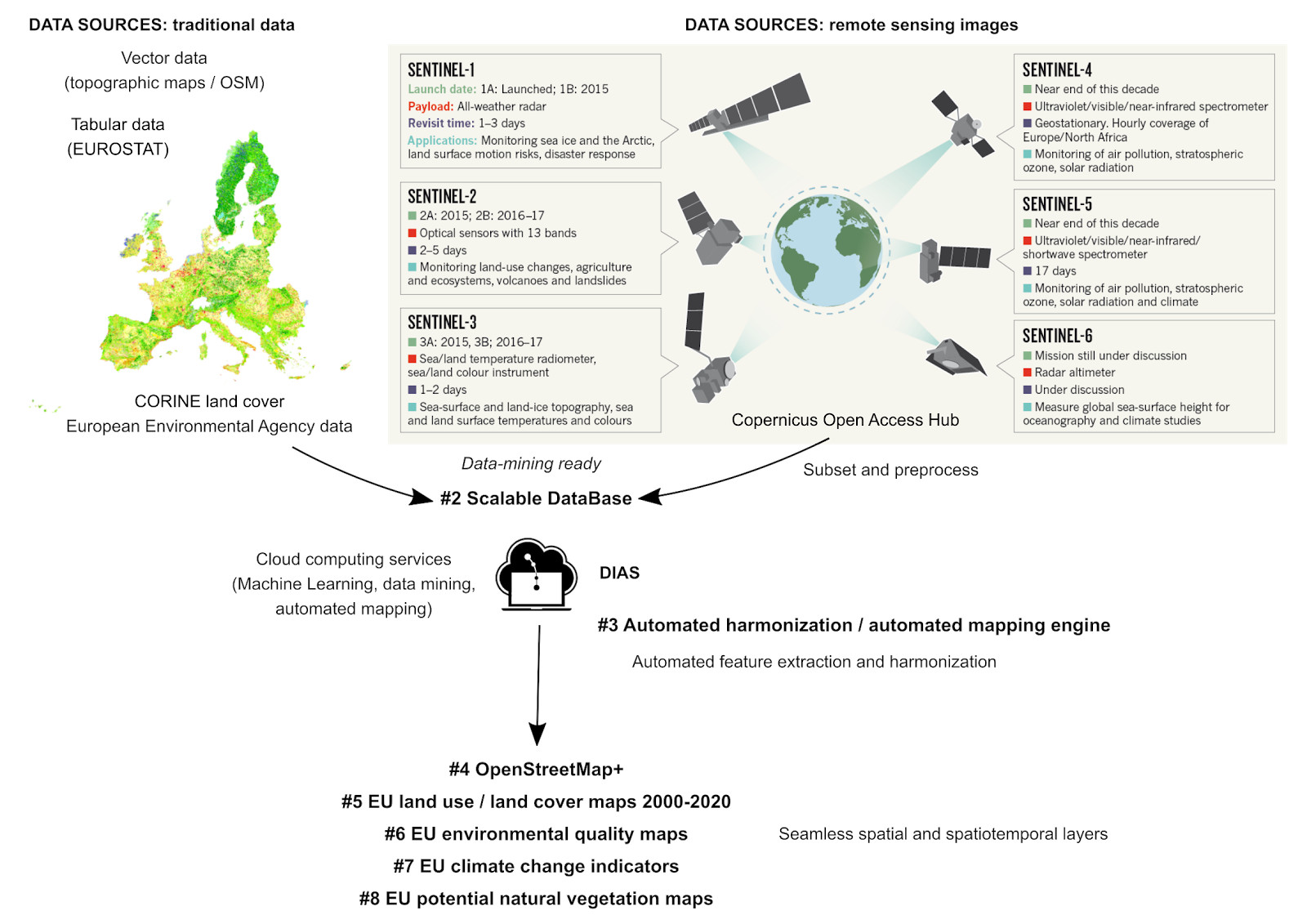

The proposed system will include:

- A data portal/Web app allowing easy access to data catalogs and services,

- A list of generic services (developed with the FOSS4G software stack) for automated mapping/harmonization and merging of administrative, socioeconomic, climatic and environmental data,

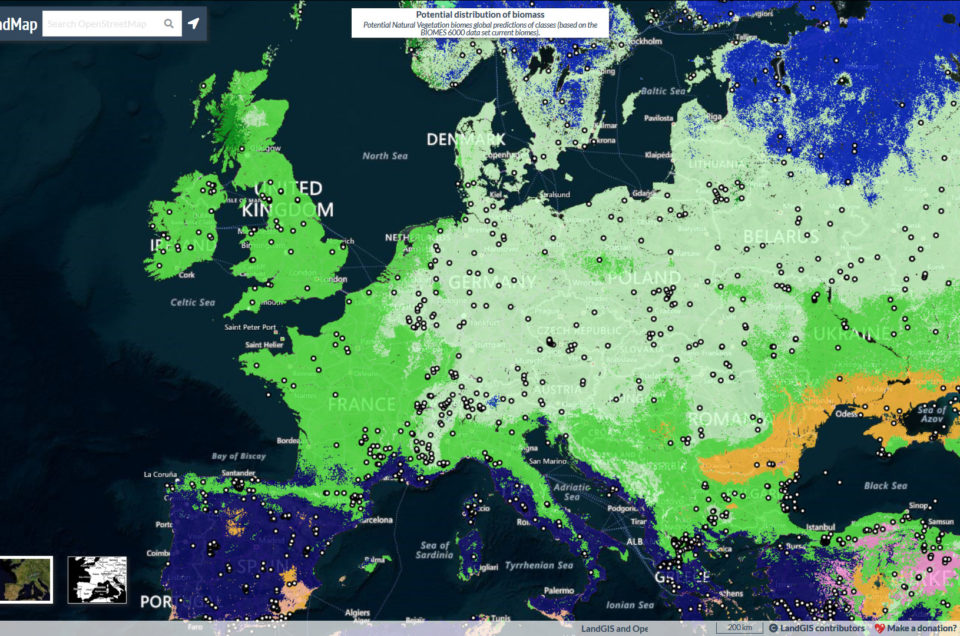

- A list of new, added-value, pan-EU data sets (including an “OpenStreetMap+” — enhanced version of the OpenStreetMap for EU, Land cover time series 2000–2020, environmental quality maps, climate change indicators, potential natural vegetation maps, etc.) generated using automated harmonization services,

- Detailed technical documentation (tutorials and use cases) to allow for further development and reuse of the data, portal, app and services.

Key development principles

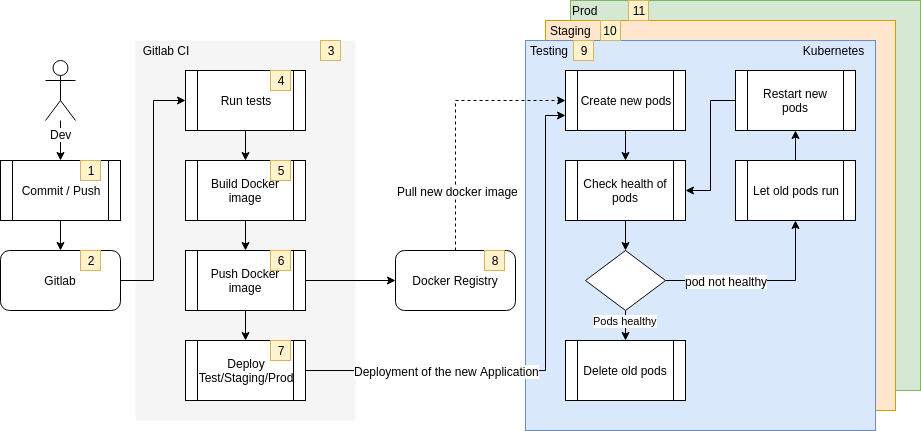

The system development will be based on the following five key principles of producing Digital Service Infrastructure:

- User satisfaction: we aim at providing open and diverse access to subsets and whole data sets, so as to satisfy large communities of users,

- Robustness: the system has to be secure and accessible 24 hours a day without interruptions,

- Version control: once entered, data and code are always traceable through gitlab.com,

- Cloud-based solutions: serving is optimized to satisfy dynamic traffic,

- Scalable applications: implemented in a way that allows tenfold increase in traffic without re-implementing functionality.

We consider the following groups of harmonization:

- Harmonization of geographical entities (upscaling, downscaling, resolving of edge-problems and cross-border problems) so that seamless geographical layers can be produced,

- Harmonization of variables coming from different sources to the same standard,

- Semantic harmonization allowing for combination of data with different legends and mapping concepts,

- Harmonization of the map styling and metadata standards (Brodeur, 2019),

- Harmonization of data quality, i.e. providing close-to-homogeneous data quality standards.
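Semantic harmonization, as listed above, often comes down to a legend crosswalk. The following is a minimal sketch of that idea, not the project's implementation: the target legend and the crosswalk are hypothetical, and only a handful of CORINE Land Cover codes are used as the source legend.

```python
# Hypothetical target legend, used only for this illustration.
TARGET_LEGEND = {1: "Built-up", 2: "Cropland", 3: "Forest", 4: "Water"}

# Assumed crosswalk from a few CORINE Land Cover codes to the target legend.
CORINE_TO_TARGET = {
    111: 1,  # Continuous urban fabric
    112: 1,  # Discontinuous urban fabric
    211: 2,  # Non-irrigated arable land
    311: 3,  # Broad-leaved forest
    312: 3,  # Coniferous forest
    313: 3,  # Mixed forest
    512: 4,  # Water bodies
}

NODATA = 0


def harmonize_legend(codes, crosswalk, nodata=NODATA):
    """Translate source class codes to target codes; unmapped codes become nodata."""
    return [crosswalk.get(code, nodata) for code in codes]


source_pixels = [111, 311, 313, 211, 999, 512]
harmonized = harmonize_legend(source_pixels, CORINE_TO_TARGET)

# Codes without a crosswalk entry are reported rather than silently dropped.
unmapped = [c for c, h in zip(source_pixels, harmonized) if h == NODATA]
print(harmonized)  # [1, 3, 3, 2, 0, 4]
print(unmapped)    # [999]
```

Keeping the crosswalk as plain data means it can be versioned and reviewed separately from the code that applies it.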

We distinguish between 5 principal levels of data:

- Raw data (L0): data sets such as the original L0 satellite image.

- Preprocessed data (L1): data sets where obvious artifacts are removed including any systematic bias.

- Analysis-ready data (L2): data (i.e. data products) which contain almost no artifacts, from which all bias has been removed, and which can hence be used directly for spatial analysis.

- Decision-ready data (L3): data products that can be used to support direct unambiguous decisions.

- Decisions (L4): data which can directly be used further in automated pipelines (e.g. turn right on the next crossing).

With Geo-harmonizer we aim at providing analysis-ready (L2) and decision-ready (L3) data, i.e. the third and fourth levels above, while we anticipate that the decisions (L4) will be served by B2B solutions or similar. We aim at using existing Open Source tools for data harmonization as much as possible (e.g. Bieszczad et al., 2019), and at building on existing experience (Wiemann & Bernard, 2016; Ronzhin et al., 2019).

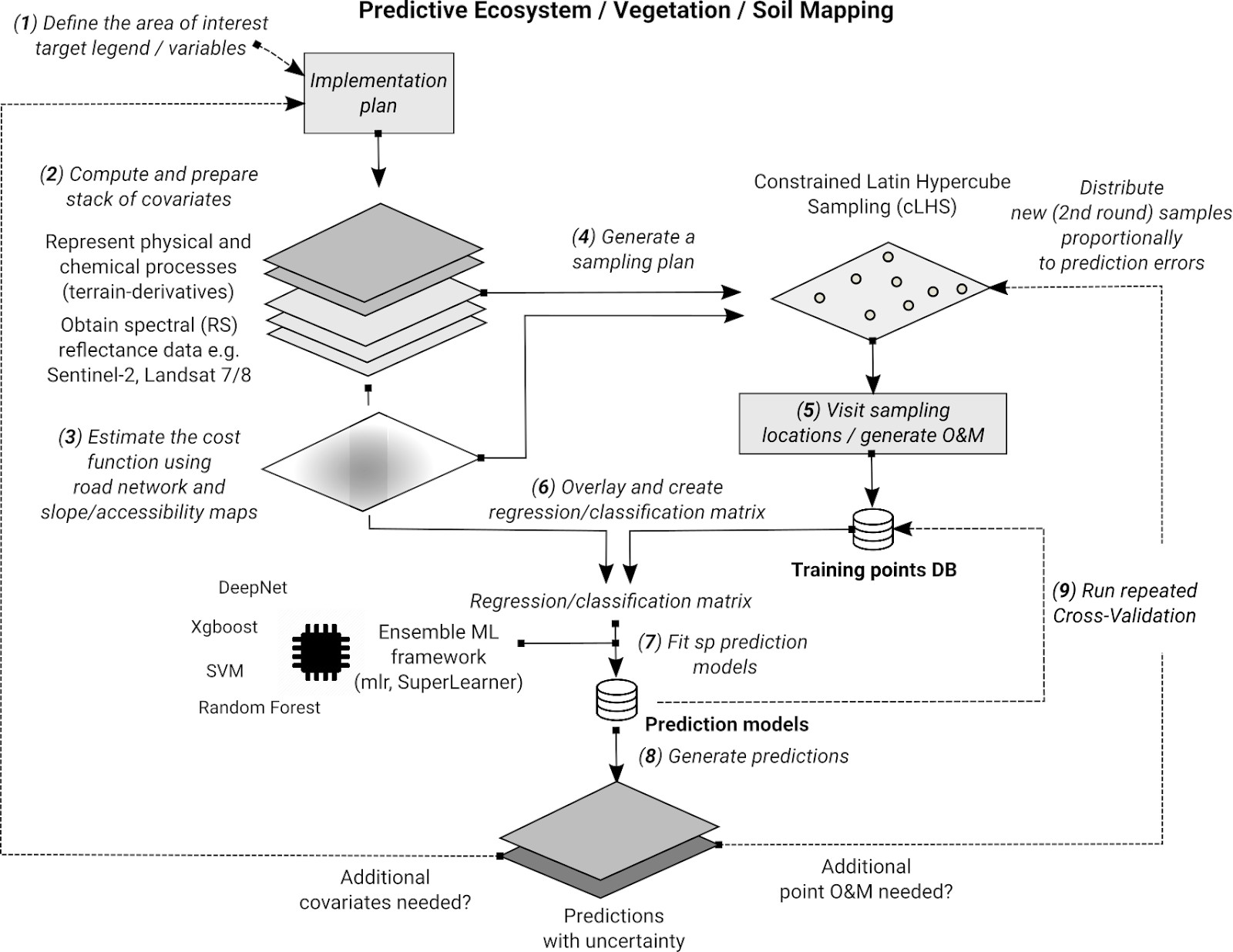

Data harmonization/production using Machine Learning

We use state-of-the-art Machine Learning to help fill in gaps in data, harmonize multisource data and produce ensemble estimates based on multiple data products of the same kind. Harmonization in the data science context is thus a series of processes that make the raw data more analysis-ready and/or decision-ready.

For prediction of land cover classes we plan to use an Ensemble Machine Learning (EML) framework (SuperLearner) as implemented in the R package mlr (Bischl et al., 2016). For EML, we will use 3–4 standard learners including random forest (ranger), extreme gradient boosting (XGBoost) and deep learning (deepnet). We will first produce a series of cloud-free mosaics with the 25% quantile and interquartile range (IQR) for key bands (Landsat/Sentinel) for classification purposes, then prepare training points, develop local case studies and run a series of classification accuracy tests.
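The per-pixel 25% quantile and IQR mentioned above can be sketched as follows. This is a simplified illustration under stated assumptions: images are small nested lists, cloud-masked pixels are `None`, and the quantile uses the "inclusive" interpolation of Python's `statistics.quantiles`. The quantile definition and software actually used in production may differ.

```python
import statistics


def temporal_composite(stack):
    """Per-pixel 25% quantile and IQR over a time series of images.

    `stack` is a list of images (one per date); each image is a list of rows,
    and cloud-masked pixels are None. Pixels with fewer than two valid
    observations stay None in both outputs.
    """
    n_rows, n_cols = len(stack[0]), len(stack[0][0])
    q25 = [[None] * n_cols for _ in range(n_rows)]
    iqr = [[None] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            values = [img[r][c] for img in stack if img[r][c] is not None]
            if len(values) < 2:
                continue
            q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
            q25[r][c] = q1
            iqr[r][c] = q3 - q1
    return q25, iqr


# Five dates of a 1 x 2 pixel band (scaled reflectance); None marks clouds.
stack = [[[10, None]], [[20, None]], [[None, None]], [[30, 40]], [[50, None]]]
q25, iqr = temporal_composite(stack)
print(q25)  # [[17.5, None]]
print(iqr)  # [[17.5, None]]
```

A low quantile is less sensitive to bright cloud remnants than the mean, while the IQR summarizes seasonal variability in a single layer.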

| # | Title | Description | Input variables | Outputs | Example of use |
|---|---|---|---|---|---|
| 1 | HPC spatial overlay | Efficient scalable (running in parallel) functions for overlay (usually points/polygons over rasters) | Vector layers, list of rasters (usually Cloud-optimized mosaics) | Usually tabular values, either raw data or aggregates | Prepare training data for ML by overlaying points and large volume of rasters |
| 2 | HPC ML training | Run model training and fine-tuning, produce model ready for production | Regression matrix, initial model parameters | Production-ready models | Fit models that can be used to produce spatial predictions/maps |
| 3 | HPC spatial prediction | Run spatial prediction so that massive volumes of data can be produced cost-effectively | ML models, prediction locations, prediction parameters | Predictions, usually as spatial layers (Cloud Optimized GeoTIFFs) | Run predictions of land cover classification or similar and produce a map for the whole of Europe |
| 4 | HPC cross-validation | Run cross-validation with refitting and produce accuracy metrics | ML models, target accuracy metrics | Performance measures and plots | Estimate average accuracy for a model across the whole domain of interest |
| 5 | Digital terrain modelling wrapper | Wrapper function to produce an ensemble DTM, derive hydrological and similar parameters | List of elevation data / spatial layers that are merged into a single layer | Final harmonized layer that is analysis-ready/layer quality | Digital terrain model which is analysis-ready |
| 6 | GeoTIFF mosaicking functions | Wrapper function to produce Cloud-optimized GeoTIFF | List of tiles or | Mosaic as Cloud Optimized GeoTIFF or similar | Combine all tiles into a single file/object that can be easily migrated |
| 7 | Produce process chain | Convert (translate) list of functions/a workflow into a process chain that can then be deployed | Input layers, functions and output layer names | Docker script or similar | Converts an R/Python script into a process chain (via docker or similar) |
| 8 | Harmonize environmental layers | Wrapper function to harmonize land cover, environmental, PNV layers to a single standard | An input layer, target legend, resolution, format | Output harmonized layers with exact legend, metadata etc. | Specific function for this project that can be possibly extended |
| 9 | Publish data through a web-service | Wrapper function to publish/update produced layers | Input layer, styling, |
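As a rough illustration of the first service in the table (spatial overlay of points over a list of rasters, run in parallel), the sketch below samples several in-memory rasters at the same point locations. The raster dictionary layout (`x_min`, `y_max`, `res`, `values`) and the function names are assumptions made for this example; a real service would read Cloud Optimized GeoTIFFs through GDAL or a similar library.

```python
from concurrent.futures import ThreadPoolExecutor


def sample_raster(raster, points):
    """Return the raster value under each (x, y) point, or None outside the grid."""
    x_min, y_max, res = raster["x_min"], raster["y_max"], raster["res"]
    grid = raster["values"]
    out = []
    for x, y in points:
        col = int((x - x_min) // res)   # columns count eastwards from x_min
        row = int((y_max - y) // res)   # rows count southwards from y_max
        inside = 0 <= row < len(grid) and 0 <= col < len(grid[0])
        out.append(grid[row][col] if inside else None)
    return out


def overlay(points, rasters, max_workers=4):
    """Overlay points on several rasters in parallel; one output column per raster."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        columns = list(pool.map(lambda r: sample_raster(r, points), rasters))
    names = [r["name"] for r in rasters]
    return [dict(zip(names, row)) for row in zip(*columns)]


# Two hypothetical 2 x 2 rasters sharing the same 30 m grid.
rasters = [
    {"name": "red", "x_min": 0, "y_max": 60, "res": 30, "values": [[1, 2], [3, 4]]},
    {"name": "nir", "x_min": 0, "y_max": 60, "res": 30, "values": [[10, 20], [30, 40]]},
]
points = [(15, 45), (45, 15), (100, 10)]
table = overlay(points, rasters)
print(table)
```

Threads suit this pattern when the work is dominated by reading remote files; for CPU-bound sampling a process pool would be the more natural choice.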

Development principles for web-interfaces

Users and clients require a simple interface to access open information about their area of interest, such as farms, land or properties. To achieve this, we will develop a user-oriented web interface in the form of a dashboard (based on JavaScript, the Semantic UI library and the React, Angular or Vue.js framework, or similar), then test and improve it based on user satisfaction/user experience. In addition, we aim to use state-of-the-art technology for importing, processing (Goßwein et al., 2019), data mining and visualization, which will include:

- High Performance Computing systems to speed up processing of large data (implemented e.g. through Copernicus Data and Information Access Services — DIAS or similar),

- Data mining and data science solutions based on Machine Learning, Artificial Neural Networks and automated mapping (Casalicchio, 2017),

- Open source solutions for cloud-based Databases and Web Processing Services (OGC WPS/API),

- 3D GIS and virtual/augmented reality technology to allow for diversity of visualization options,

- REST services with Open Archives Initiative Protocol for Metadata Harvesting.
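To make the last item more concrete, the sketch below builds an OAI-PMH `ListRecords` request URL. The `verb`, `metadataPrefix`, `from` and `set` arguments are part of the OAI-PMH protocol; the endpoint URL is a placeholder, since the project's actual service address is not specified here.

```python
from urllib.parse import urlencode

# Hypothetical endpoint, used only for illustration.
BASE_URL = "https://example.org/oai"


def list_records_url(base_url, metadata_prefix="oai_dc", from_date=None, set_spec=None):
    """Build an OAI-PMH ListRecords request URL for selective metadata harvesting."""
    params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    if from_date:
        params["from"] = from_date  # harvest only records changed since this date
    if set_spec:
        params["set"] = set_spec    # restrict harvesting to one set of records
    return f"{base_url}?{urlencode(params)}"


url = list_records_url(BASE_URL, from_date="2021-01-01")
print(url)
# https://example.org/oai?verb=ListRecords&metadataPrefix=oai_dc&from=2021-01-01
```

A harvester would send this request over HTTP and follow the resumption token returned by the server until the list is complete.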

Data and storage model

The general idea is that all datasets produced in this project are delivered as both (1) online database solutions and (2) data extracts (files). For online database solutions, we will consider using a traditional object-relational database system (PostgreSQL with the PostGIS extension; Hsu, 2015) that has efficient indexing (e.g. spatial indexes), allows for easy spatial retrieval and is Open Geospatial Consortium (OGC) compatible. Other cloud-based DB solutions such as Amazon Relational Database Service (RDS), CouchDB, MongoDB and/or Google Cloud Databases / Cloud SQL are more attractive because they are fully scalable and do not require maintenance from the producer’s / publisher’s side. We plan to run comparisons continuously and then choose 1–2 parallel solutions that best match the state of the technology.

For data extracts (files) we aim at using:

- Cloud-Optimized GeoTIFFs (https://www.cogeo.org/): for all regular / gridded data, and

- GeoPackage (https://gdal.org/drivers/vector/gpkg.html) and/or FlatGeobuf (https://gdal.org/drivers/vector/flatgeobuf.html): for all/selected vector data.

Target data models:

- Tabular / point data: PostGIS / OGC OM (https://www.opengeospatial.org/standards/om),

- Polygon data: PostGIS (INSPIRE examples) / FlatGeobuf,

- Gridded/raster data: Cloud Optimized GeoTIFF (see GDAL COG driver),

- Time-series raster data: original as COG list, then imported into GRASS GIS (TGRASS),

- Time-series vector data: original as FlatGeobuf, then imported into GRASS GIS (TGRASS).

Use of CO-GeoTIFFs and FlatGeobuf is important because:

- Reading and writing of data is optimized i.e. usually much faster than using other GDAL drivers (see a comparison for FlatGeobuf at https://github.com/bjornharrtell/flatgeobuf),

- Spatial queries (point overlay and similar) are optimized, especially for CO-GeoTIFFs,

- Visualization of data in web-mapping solutions is optimized.
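One reason spatial queries on CO-GeoTIFFs are fast is internal tiling: a client only needs to fetch the tiles that intersect the query. The arithmetic can be sketched as below, assuming square internal tiles of 512 pixels (an assumed block size, not a project setting) and pixel coordinates counted from the upper-left corner.

```python
def tile_index(col, row, block_size=512):
    """Internal tile (tile_col, tile_row) that holds pixel (col, row)."""
    return col // block_size, row // block_size


def tiles_for_window(col_off, row_off, width, height, block_size=512):
    """All internal tiles intersecting a pixel window, in row-major order."""
    first_c, first_r = tile_index(col_off, row_off, block_size)
    last_c, last_r = tile_index(col_off + width - 1, row_off + height - 1, block_size)
    return [(c, r) for r in range(first_r, last_r + 1) for c in range(first_c, last_c + 1)]


# A point query touches exactly one tile, however large the mosaic is.
print(tile_index(1300, 700))                  # (2, 1)

# A 100 x 100 pixel window straddling a tile corner touches four tiles.
print(tiles_for_window(500, 500, 100, 100))   # [(0, 0), (1, 0), (0, 1), (1, 1)]
```

Combined with HTTP range requests, this is what lets a point overlay read a few hundred kilobytes from a mosaic of many gigabytes.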

Geographical area of interest

As a general objective we will produce all gridded data at standard resolutions and using standard grid definition (bounding box, etc.) to match all other products:

- Spatial domain of interest: “the Geo-harmonizer region”, i.e. “Continental EU”: the EEA (European Economic Area) plus the United Kingdom, Switzerland, Serbia, Bosnia and Herzegovina, Montenegro, Kosovo, North Macedonia and Albania.

- Projection system: https://epsg.io/3035 (equal-area)

- Standard pixel sizes: (10 m) 30 m, 100 m, 250 m, 1 km and similar,

- Corresponding data standards: EU Inspire (https://inspire.ec.europa.eu/), GISCO and OGC standards.

We will consistently use the EPSG:3035 projection system, which is an equal-area system and hence allows for derivation of densities and total areas. For better compatibility, we will follow as much as possible the data and storage models used by the CORINE land cover products (Büttner, 2014). We will develop our own coverage based on the continental EU.

For parallelization of the processing and to make download of data easier, we will consistently use the European grid system from GISCO (GEOSTAT), which splits EU population units into a regular 1 km grid. This system, however, has to be extended to countries outside the EEA, and also generalized to coarser resolutions (5 km, 10 km, 50 km).
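The generalization of a regular grid to coarser resolutions can be sketched with simple arithmetic on EPSG:3035 coordinates (metres). The functions and the cell label format below are hypothetical; the label only loosely resembles grid cell codes and is not the official GEOSTAT identifier.

```python
import math


def cell_origin(x, y, res):
    """Lower-left corner of the grid cell of size `res` (metres) containing (x, y)."""
    return math.floor(x / res) * res, math.floor(y / res) * res


def cell_id(x, y, res):
    """Illustrative cell label built from the cell origin in kilometres."""
    x0, y0 = cell_origin(x, y, res)
    return f"{res // 1000}km_N{y0 // 1000}_E{x0 // 1000}"


def tiles_for_bbox(x_min, y_min, x_max, y_max, res):
    """Origins of all cells of size `res` that intersect the bounding box."""
    x0, y0 = cell_origin(x_min, y_min, res)
    tiles = []
    y = y0
    while y < y_max:
        x = x0
        while x < x_max:
            tiles.append((x, y))
            x += res
        y += res
    return tiles


# The same point falls into nested cells at 1 km and 10 km resolution.
print(cell_id(4695432.1, 2599876.5, 1000))    # 1km_N2599_E4695
print(cell_id(4695432.1, 2599876.5, 10000))   # 10km_N2590_E4690

# A 10 km x 10 km block splits into four 5 km processing tiles.
tiles = tiles_for_bbox(4690000, 2590000, 4700000, 2600000, 5000)
print(len(tiles))  # 4
```

Because every coarser cell is aligned to the same origin, tiles can be processed independently and in parallel, then merged without resampling.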

Design of the data portal functionality and main workflows

The following two systems are used as models for the Geo-harmonizer EU data portal:

- OpenLandMap.org (original data portal of the OpenGeoHub foundation),

- The National Map (TNM) Data Download and Visualization Services of the USGS.

The Geo-harmonizer EU data portal (continental EU) will consist mostly of original elements, and we aim at making it an irreplaceable website for browsing dynamic land cover / environmental data and running harmonization of data.

Accessibility

All new added-value maps will be published under an Open Data license, specifically the Open Data Commons Open Database License (ODbL) and/or the Creative Commons Attribution-ShareAlike 4.0 International license (CC BY-SA). As such, we will closely follow the open data license policy of the OpenStreetMap Foundation. The Geo-harmonizer project will also follow the EN 301 549 standard of functional accessibility requirements (EU directive on the accessibility of public sector websites and mobile applications).

National support

All partners of the consortium have received strong support, through letters of support, from the ministries implementing the objectives of the CEF Telecom programme within national and European strategies. Availability of and access to harmonised, seamless, well-documented datasets, products and services relevant to environmental protection and sustainability is a long-standing expressed requirement, addressed in various ways: legislative (e.g. INSPIRE Directive), technical (e.g. European Open Data Portal) and financial (e.g. European Territorial Cooperation). The Geo-harmonizer answers complex technical and scientific issues that extend and improve national functionalities related to environmental characteristics.

At the national level, the project contributes substantially to the Open Data strategies, as it will develop an improved EU version of OpenStreetMap (open data) created through automated fusion of OpenStreetMap and national maps (land cover etc.). In Romania, for example, the National Strategy on the Digital Agenda for Romania 2020 supports the development of open applications using open standards, as well as the capacity to improve existing open datasets. For federal public administrations, such as Germany’s, homogeneous and seamless datasets offering climate and weather data are of great value as well. In this partner country, geodata sovereignty is exercised by the federal states (Länder); geodata are therefore often provided in various formats, time windows and spatial resolutions.

The project consortium has also received strong support from the Romanian Space Agency. Their interest is in the results of the implementation of an EU seamless web-based integration of the various geospatial datasets considered: INSPIRE data, Copernicus, OpenStreetMap, EUROSTAT etc. The agency is also interested in the technical results of implementing geospatial Open Source software solutions with state-of-the-art Machine/Deep Learning algorithms. The Machine Learning component of the initiative will accelerate the development of comprehensive results of the organizations/beneficiaries.

Impacts

With the project deliverables we target the following major groups:

- environmental scientists and GIS specialists,

- decision-makers and government agencies (e.g. European Environment Agency, DG Regio, national ministries, national environmental agencies),

- non-professionals (wider public via the EU open data portal).

We aim not only to build a harmonization service, but also to demonstrate that the system is operational by producing a list of new, added-value, pan-EU data sets, including seamless EU land use/land cover, environmental quality indicators and “OpenStreetMap+” (an improved EU version of OpenStreetMap, based on upscaling the method of Schultz et al., 2017). These data sets directly aim at supporting DG Regio and similar organizations, local and national authorities working on cross-border projects, the European Environment Agency, the JRC, and national ministries of environment, agriculture, forestry or water management across EU member states.

General dissemination & user engagement strategy

The overall objectives of activities 6 and 7 are to establish clear and effective dissemination channels for the aims of the action, to aid the development of the action’s deliverables through user engagement, and to promote/increase usage and usability of the action’s outputs. Higher engagement can be achieved through:

- Establishing concrete use cases where the Geo-harmonizer’s data and software products are used to improve e.g. (a) decision making, (b) production of spatial plans, (c) cost savings, (d) prevention of natural hazards.

- Measuring and tracking usage of data and software via web traffic / web usage and through user feedback: responding directly to requests and providing support helps to build a network with concrete users, which can then lead to new developments.

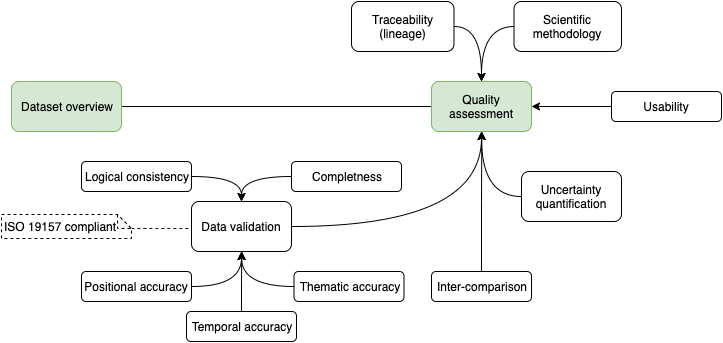

Quality assessment of the new produced data layers

The quality assessment of the Geo-harmonizer map products is a crucial part of the production workflow; it will be executed for both input and output datasets. For consistency, its design will follow a set of standardized guidelines that will result in quality assessment reports compliant with international geospatial data quality standards (e.g. ISO 19157). Given that no funds are allocated within the Geo-harmonizer project for field campaigns to collect in-situ measurements for validating the results, the data validation (Loew et al., 2017) of each added-value map product will be achieved through two main methods: (1) identification of pre-existing datasets that can be considered as ground truth (datasets from official sources, such as the European Open Data Portal) and (2) cross-validation. Efforts will be invested in developing processing chains that allow automatic updates of the quality assessment following the update cycle of the map products. Fig. IP.4 illustrates the main sections of the quality assessment reports.

- Traceability (lineage) (non-quantitative information) — provenance of data source(s) used, including versions of the dataset

- Scientific methodology (non-quantitative information) — description of the methodology used in the development of the map product (preferably with indication to a peer-reviewed paper)

- Usability (non-quantitative information) — includes fitness for purpose that will be considered from use cases developed within the project and known limitations of the map products.

- Uncertainty quantification — the method used will be highly dependent on the map product in question

- Inter-comparison — a section dedicated to the results of comparing the map products developed within the Geo-harmonizer and the relevant datasets available, with respect to coverage, time series length, spatial/temporal resolution, level of automatization etc.

- Data validation, which comprises the elements defined in ISO 19157 and presented in Table IP.2; it depends intrinsically on the specifications of each Geo-harmonizer map product.

| Element | Sub-element | Description |
|---|---|---|
| Completeness | Commission | excess data present in the dataset |
| | Omission | data absent from a dataset |
| Logical consistency | Conceptual consistency | how well a dataset adheres to the rules of its conceptual schema |
| | Domain consistency | how well values adhere to their value domains |
| | Format consistency | the degree to which data is stored in accordance with the claimed physical structure of the dataset |
| Positional accuracy | Absolute or external accuracy | the closeness of reported coordinate values to values accepted as or being true |
| | Relative or internal accuracy | the closeness of the relative positions of features within a dataset |
| | Gridded data position accuracy | the closeness of gridded data position values to values accepted as or being true |
| Temporal accuracy | Accuracy of a time measurement | the correctness of the temporal references of an item |
| | Temporal consistency | the correctness of ordered events or sequences |
| | Temporal validity | the validity of data with respect to time |
| Thematic accuracy | Classification correctness | comparison of the characteristics assigned to features or their attributes to a universe of discourse |
| | Non-quantitative attribute correctness | the correctness of non-quantitative attributes |
| Usability | | Specific quantitative information about a dataset’s suitability for a particular application |
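For the "classification correctness" element, one common way to report results is overall accuracy together with per-class omission and commission error rates, computed by comparing map classes with reference data. The sketch below illustrates that calculation only; the class names and samples are invented, and it does not cover the feature-level completeness checks listed in the table.

```python
from collections import Counter


def classification_report(reference, predicted):
    """Overall accuracy plus per-class omission and commission error rates."""
    pairs = Counter(zip(reference, predicted))
    classes = sorted(set(reference) | set(predicted))
    correct = sum(pairs[(c, c)] for c in classes)
    report = {"overall_accuracy": correct / len(reference), "classes": {}}
    for c in classes:
        n_ref = sum(n for (ref, _), n in pairs.items() if ref == c)
        n_pred = sum(n for (_, pred), n in pairs.items() if pred == c)
        report["classes"][c] = {
            # share of reference samples of class c that the map missed
            "omission": 1 - pairs[(c, c)] / n_ref if n_ref else None,
            # share of samples mapped as c that belong to another class
            "commission": 1 - pairs[(c, c)] / n_pred if n_pred else None,
        }
    return report


# Invented validation samples: reference labels versus mapped labels.
reference = ["forest", "forest", "forest", "crop", "crop", "water", "water", "water"]
predicted = ["forest", "forest", "crop", "crop", "crop", "water", "water", "forest"]
report = classification_report(reference, predicted)
print(report["overall_accuracy"])  # 0.75
```

The same function can be applied to each cross-validation fold, so that the report also conveys how stable the accuracy estimates are.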

Sustainability

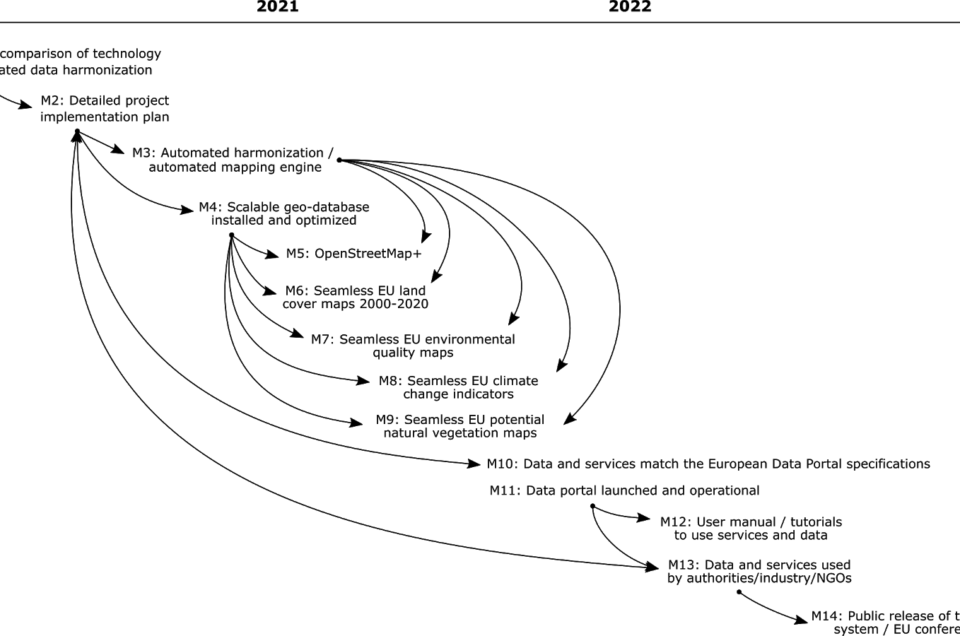

Sustainability and long-term success are key aspects of the majority of projects of all companies in this consortium. To ensure sustainability of the Geo-harmonizer, we plan to deploy some systems by the end of the first 1.5 years of the project, e.g. the harmonization engine, “OpenStreetMap+”, seamless EU land cover maps 2000–2020, environmental quality maps, climate change indicators, potential natural vegetation maps (Hengl et al., 2018) and the software solutions. This means that the majority of deliverables should be available for testing and improvements well before the end of the contract.

Users will be encouraged to use the services because the new value-added data in particular will be of high quality and decision-ready, and hence fit to support real-life applications. We also plan to make extensive use of social networks, workshops, summer schools and conferences to promote Geo-harmonizer and raise awareness of its functionality. In addition, we plan to publish detailed technical documentation freely online to allow further development and reuse of these data and services, including through automated deployment.

Geo-harmonizer has two typical types of results: new added-value datasets and open source software developments (Coetzee, 2020). Additionally, Geo-harmonizer addresses a pressing issue by deploying its generic services and its database in the cloud, preferably on one of the DIAS infrastructures or similar. The use cases will address all indicated components.

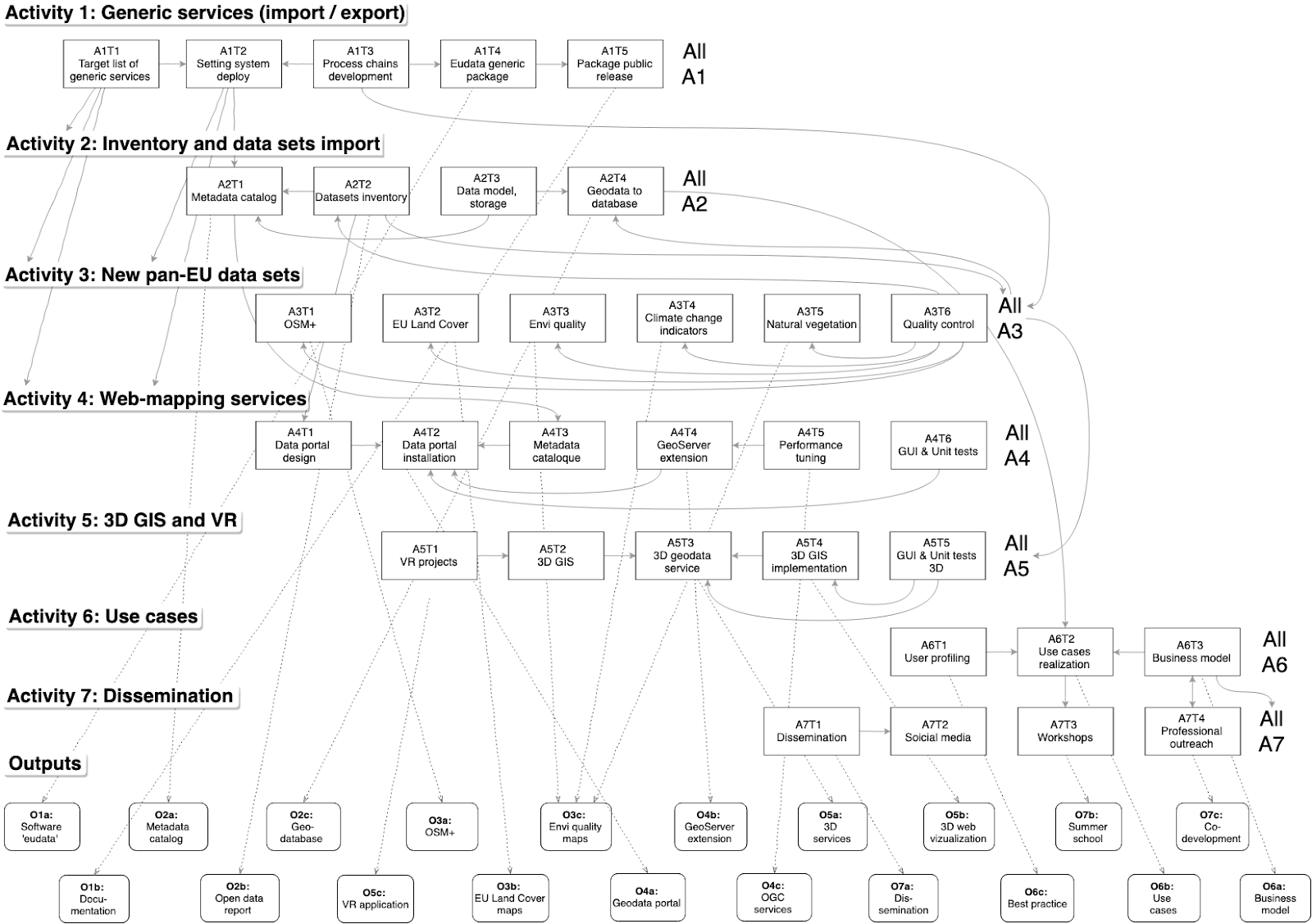

Activities and tasks

The project runs under 7 connected activities:

- Generic services for data import, harmonization and export.

- Inventory and import of existing data sets (INSPIRE, Copernicus, EEA).

- New added-value pan-EU data sets.

- Web-mapping services/data portal.

- 3D GIS and virtual and augmented reality solutions.

- Use cases for long-term sustainability of open data & services.

- Dissemination & user engagement.

Activities are led by 1–2 beneficiaries, who are in principle responsible for all tasks and deliverables (outputs) within their activity, see Table 3.

| Activity | Activity title | Dates | Task | Task title | Task leader | Task partners |
|---|---|---|---|---|---|---|
| 1 | Generic services for data import, harmonization and export | 1.9.2019 – 30.9.2021 | 1 | Target list of generic services and implementation plan | OpenGeoHub | CTU, MultiOne, mundialis, (Terrasigna) |
| | | | 2 | Setting up of a system deploy chain and automated testing framework | mundialis | MultiOne, CTU |
| | | | 3 | Development of process chains | MultiOne | CTU, mundialis |
| | | | 4 | Production and testing of the generic package | CTU | MultiOne, OpenGeoHub |
| | | | 5 | Public release of the package and user feedback | OpenGeoHub | MultiOne |
| 2 | Inventory and import of existing data sets (INSPIRE, Copernicus, EEA) | 1.9.2019 – 30.9.2021 | 1 | Setup and configure metadata-catalogue and metadata-profiles | mundialis | OpenGeoHub |
| | | | 2 | Inventory and evaluate data sources due to their usability within the project | CTU | mundialis, OpenGeoHub |
| | | | 3 | Development of a data model and data storage concept | OpenGeoHub | CTU, MultiOne, mundialis |
| | | | 4 | Import and/or integrate the identified geodata with process chains into database | mundialis | CTU, MultiOne |
| 3 | New added value pan-EU data sets | 1.4.2020 – 30.6.2022 | 1 | Production of the OpenStreetMap+ | OpenGeoHub | Terrasigna, mundialis |
| | | | 2 | Production of the EU land cover maps 2000-2020 | CTU | OpenGeoHub, Terrasigna |
| | | | 3 | Production of the environmental quality maps | OpenGeoHub | Terrasigna, CTU |
| | | | 4 | Production of the climate change indicators | Terrasigna | mundialis, OpenGeoHub |
| | | | 5 | Production of potential natural vegetation maps | OpenGeoHub | Terrasigna, mundialis |
| | | | 6 | Quality control | Terrasigna | CTU |
| 4 | Web-mapping services / data portal | 1.1.2021 – 31.12.2021 | 1 | Design of the data portal functionality and main workflows | OpenGeoHub | CTU, mundialis, (Terrasigna, MultiOne) |
| | | | 2 | Implementation and installation of the data portal | OpenGeoHub | mundialis, CTU |
| | | | 3 | Integration of the metadata catalogue (setup in activity 2) into the portal | mundialis | OpenGeoHub |
| | | | 4 | Implementation of a GeoServer extension to automate the import of data | mundialis | CTU |
| | | | 5 | Setting up and performance tuning of the OGC services | CTU | mundialis |
| | | | 6 | Implementation of GUI- and Unit tests on the dataportal and its backend-services | OpenGeoHub | CTU, mundialis |
| 5 | 3D GIS and virtual and augmented reality solution | 1.1.2021 – 31.12.2021 | 1 | Research on existing projects using VR, AR applications for our purpose | CTU | – |
| | | | 2 | Testing of the various 3D GIS, VR and AR workflows | CTU | Terrasigna + mundialis (3D), CTU (VR/AR) |
| | | | 3 | Development and installation of standardized 3D-geodata-services + VR/AR prototype app | Terrasigna | CTU (VR/AR), mundialis (3D) |
| | | | 4 | Prototype of 3D GIS implementation into the Geo-harmonizer | Terrasigna | mundialis |
| | | | 5 | Implementation of GUI- and Unit tests on the 3D clients and 3D services | Terrasigna | CTU (VR/AR), mundialis (3D) |
| 6 | Use cases for long-term sustainability of open data & services | 1.9.2019 – 30.5.2022 | 1 | User profiling and initial use case development | Terrasigna | OpenGeoHub, CTU, mundialis |
| | | | 2 | Preparation and realization of the selected use cases (prototypes) | CTU | Terrasigna, OpenGeoHub, mundialis |
| | | | 3 | Business model enabling long-term sustainability of open data and services | Terrasigna | OpenGeoHub, mundialis |
| 7 | Dissemination & user engagement | 1.7.2020 – 30.6.2022 | 1 | Detailed dissemination & user engagement strategy | Terrasigna | OpenGeoHub |
| | | | 2 | Communication, social media and impact monitoring | Terrasigna | OpenGeoHub |
| | | | 3 | Workshops & training | OpenGeoHub | Terrasigna, OpenGeoHub, CTU, mundialis |
| | | | 4 | Professional outreach & use case co-development | mundialis | CTU, Terrasigna, OpenGeoHub |

References

- Ballabio, C., Lugato, E., Fernández-Ugalde, O., Orgiazzi, A., Jones, A., Borrelli, P., Montanarella, L. and Panagos, P., 2019. Mapping LUCAS topsoil chemical properties at European scale using Gaussian process regression. Geoderma, 355: 113912.

- Bieszczad, J., Shapiro, M., Entekhabi, D., Callender, D. R., Milloy, J., Sullivan, D., & Ueckermann, M. P. (2019, January). PODPAC: A Python Library for Automatic Geospatial Data Harmonization and Seamless Transition to Cloud-Based Processing. In 99th American Meteorological Society Annual Meeting. AMS.

- Bischl, B., Lang, M., Kotthoff, L., Schiffner, J., Richter, J., Studerus, E., … & Jones, Z. M. (2016). mlr: Machine Learning in R. The Journal of Machine Learning Research, 17(1), 5938-5942.

- Brocca, L., Filippucci, P., Hahn, S., Ciabatta, L., Massari, C., Camici, S., Schüller, L., Bojkov, B., and Wagner, W. (2019), SM2RAIN–ASCAT (2007–2018): global daily satellite rainfall data from ASCAT soil moisture observations, Earth Syst. Sci. Data, 11, 1583–1601, https://doi.org/10.5194/essd-11-1583-2019.

- Brocca, L., T. Moramarco, F. Melone, and W. Wagner (2013), A new method for rainfall estimation through soil moisture observations, Geophys. Res. Lett., 40, doi:10.1002/grl.50173.

- Brodeur, J.; Coetzee, S.; Danko, D.; Garcia, S.; Hjelmager, J., (2019). Geographic Information Metadata—An Outlook from the International Standardization Perspective. ISPRS Int. J. Geo-Inf., 8, 280.

- Büttner, G. (2014). CORINE land cover and land cover change products. In Land use and land cover mapping in Europe (pp. 55-74). Springer, Dordrecht.

- Casalicchio, G., Bossek, J., Lang, M., Kirchhoff, D., Kerschke, P., Hofner, B., Bischl, B. (2017). OpenML: An R package to connect to the machine learning platform OpenML. Computational Statistics, 1-15.

- Coetzee, S., Ivánová, I., Mitasova, H., & Brovelli, M. A. (2020). Open Geospatial Software and Data: A Review of the Current State and A Perspective into the Future. ISPRS International Journal of Geo-Information, 9(2), 90.

- European Space Agency (ESA), CCI Data Standards, (2018). Ref CCI-PRGM-EOPS-TN-13-0009, available online, last accessed on 22nd of February, 2020

- Gebbert, S., Leppelt, T., & Pebesma, E. (2019). A topology based spatio-temporal map algebra for big data analysis. Data, 4(2), 86.

- Goßwein, B., Miksa, T., Rauber, A., Wagner, W. (2019). Data identification and process monitoring for reproducible earth observation research, IEEE eScience 2019, DOI:10.1109/eScience.2019.00011

- Hengl, T., Walsh, M. G., Sanderman, J., Wheeler, I., Harrison, S. P., & Prentice, I. C. (2018). Global mapping of potential natural vegetation: an assessment of machine learning algorithms for estimating land potential. PeerJ, 6, e5457. https://peerj.com/articles/5457/

- Hsu, R. (2015). PostGIS in action. Manning Publications.

- ISO/TC 211, ISO 19157:2013, Geographic Information-Data Quality

- Lehto, L., Kähkönen, J., Oksanen, J., & Sarjakoski, T. (2019). Visualisation and analysis of multi-resolution raster geodata-sets in the cloud. Abstracts of the ICA, 1.

- Lizundia-Loiola J., Pettinari M. L., Chuvieco E., Storm T., Gómez-Dans J. (2018). ESA CCI ECV Fire Disturbance: Algorithm Theoretical Basis Document-MODIS, version 2.0. Fire_cci_D2.1.3_ATBD-MODIS_v2.0. Available at https://esa-fire-cci.org/documents.

- Loew, A., et al., (2017), Validation practices for satellite-based Earth observation data across communities, Rev. Geophys., 55, 779–817, doi:10.1002/2017RG000562.

- Merchant et al., (2017). Uncertainty information in climate data records from Earth observation, Earth Syst. Sci. Data Discuss., doi:10.5194/essd-2017-16

- Metz, M.; Andreo, V.; Neteler, M. (2017). A New Fully Gap-Free Time Series of Land Surface Temperature from MODIS LST Data. Remote Sens., 9, 1333.

- Neteler, M., & Mitasova, H. (2013). Open source GIS: a GRASS GIS approach (Vol. 689). Springer Science & Business Media.

- Netto, H. V., Lung, L. C., Correia, M., Luiz, A. F., & de Souza, L. M. S. (2017). State machine replication in containers managed by Kubernetes. Journal of Systems Architecture, 73, 53-59.

- Ronzhin, S., Folmer, E., Lemmens, R., Mellum, R., von Brasch, T. E., Martin, E., … & Latvala, P. (2019). Next generation of spatial data infrastructure: lessons from linked data implementations across Europe. International journal of spatial data infrastructures research, 14, 83-107.

- Růžička, J. (2016). Comparing speed of Web Map Service with GeoServer on ESRI Shapefile and PostGIS. Geoinformatics FCE CTU, 15(1), 3-9. https://ojs.cvut.cz/ojs/index.php/gi/article/view/3522

- San-Miguel-Ayanz J., De Rigo D., Caudullo G., Houston Durrant T., Mauri A. (2016). European atlas of forest tree species. Brussels: European Commission, Joint Research Centre.

- Schultz, M., Voss, J., Auer, M., Carter, S., & Zipf, A. (2017). Open land cover from OpenStreetMap and remote sensing. International journal of applied earth observation and geoinformation, 63, 206-213.

- Veeckman, C., Jedlička, K., De Paepe, D., Kozhukh, D., Kafka, Š., Colpaert, P., & Čerba, O. (2017). Geodata interoperability and harmonization in transport: a case study of open transport net. Open Geospatial Data, Software and Standards, 2(1), 1-11.

- Wiemann, S., & Bernard, L. (2016). Spatial data fusion in spatial data infrastructures using linked data. International Journal of Geographical Information Science, 30(4), 613-636.
